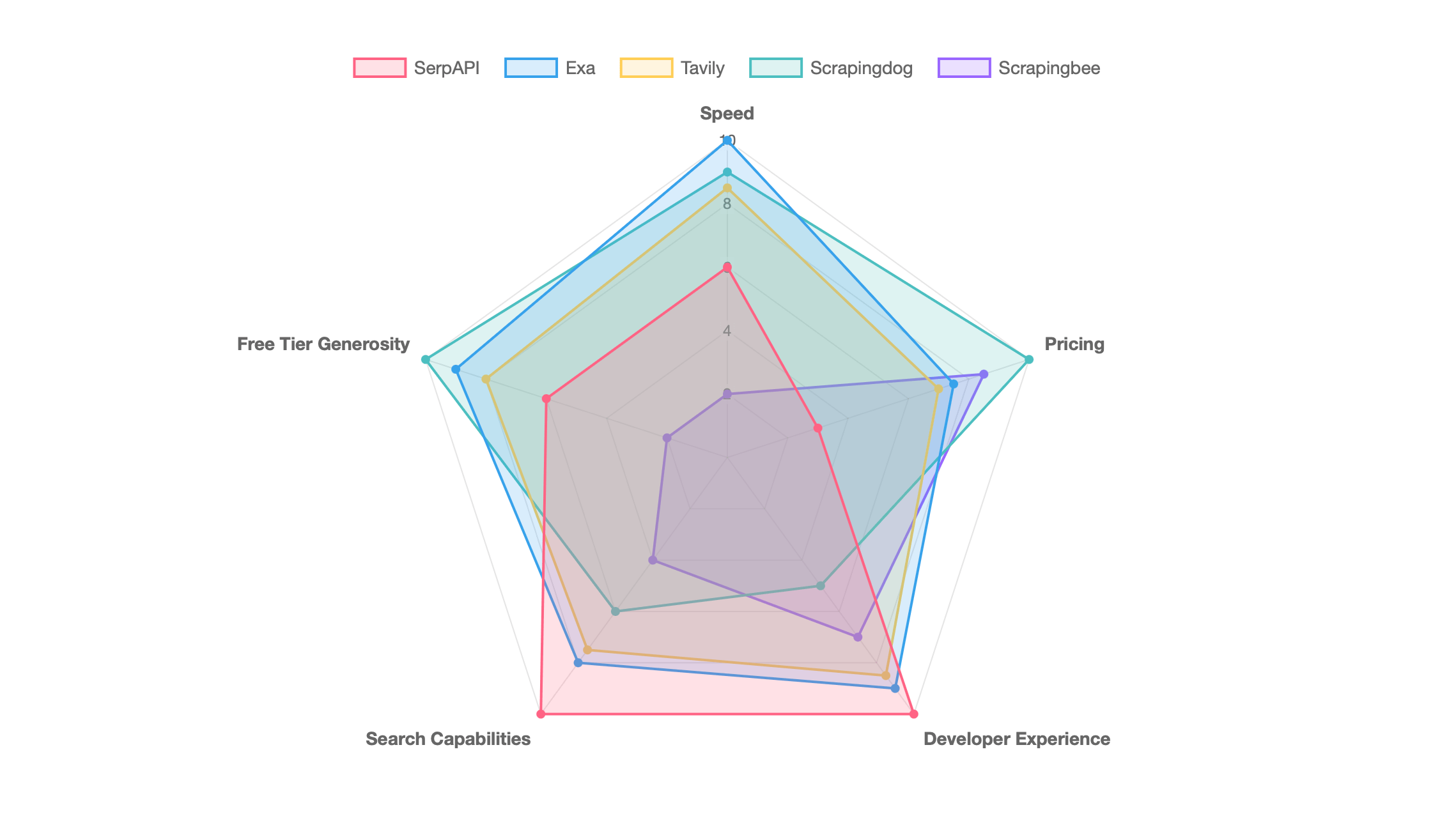

Best SERP API comparison 2025: SerpAPI vs Exa vs Tavily vs ScrapingDog vs ScrapingBee

We tested five SERP APIs with standardized benchmarks to find which performs best for different use cases. Exa delivered the fastest responses at 1.180 seconds with semantic search over a proprietary index at $5 per 1,000 queries. Tavily aggregates and parses content for LLM applications. Scrapingdog offers the lowest per-request pricing at under $0.001 at scale. SerpAPI provides the broadest coverage with 20+ search engines. Scrapingbee bundles SERP access with general web scraping.

This comparison helps you choose a SERP API for SEO monitoring, AI agents, RAG applications, or price comparison tools. Each section includes benchmark data, pricing breakdowns, and code examples.

Overview comparison

We tested five SERP APIs using this Python benchmark script that measured response time, success rate, cost, and free tier across 50 real-world queries.

| API | Avg response time | Success rate | Cost per 1,000 Searches | Free tier |

|---|---|---|---|---|

| Exa | 1.180s | 100% | $5 | 2,000 one-time |

| Scrapingdog | 1.700s | 100% | $0.20-$0.06 | 1,000+ one-time |

| Tavily | 1.885s | 100% | $5-$8 | 1,000/month |

| SerpAPI | 2.972s | 100% | $15 | 250/month |

| Scrapingbee | 7.257s | 100% | $0.56-$1.44 | 66 one-time |

Response times vary based on what each API delivers: SerpAPI returns raw SERP data, Exa performs semantic search with embeddings generation, and Tavily aggregates content from multiple sources with AI ranking. These tests used free tiers, but some providers offer faster speeds for additional cost (for example, SerpAPI's Ludicrous Speed tiers claim 2.2x faster responses).

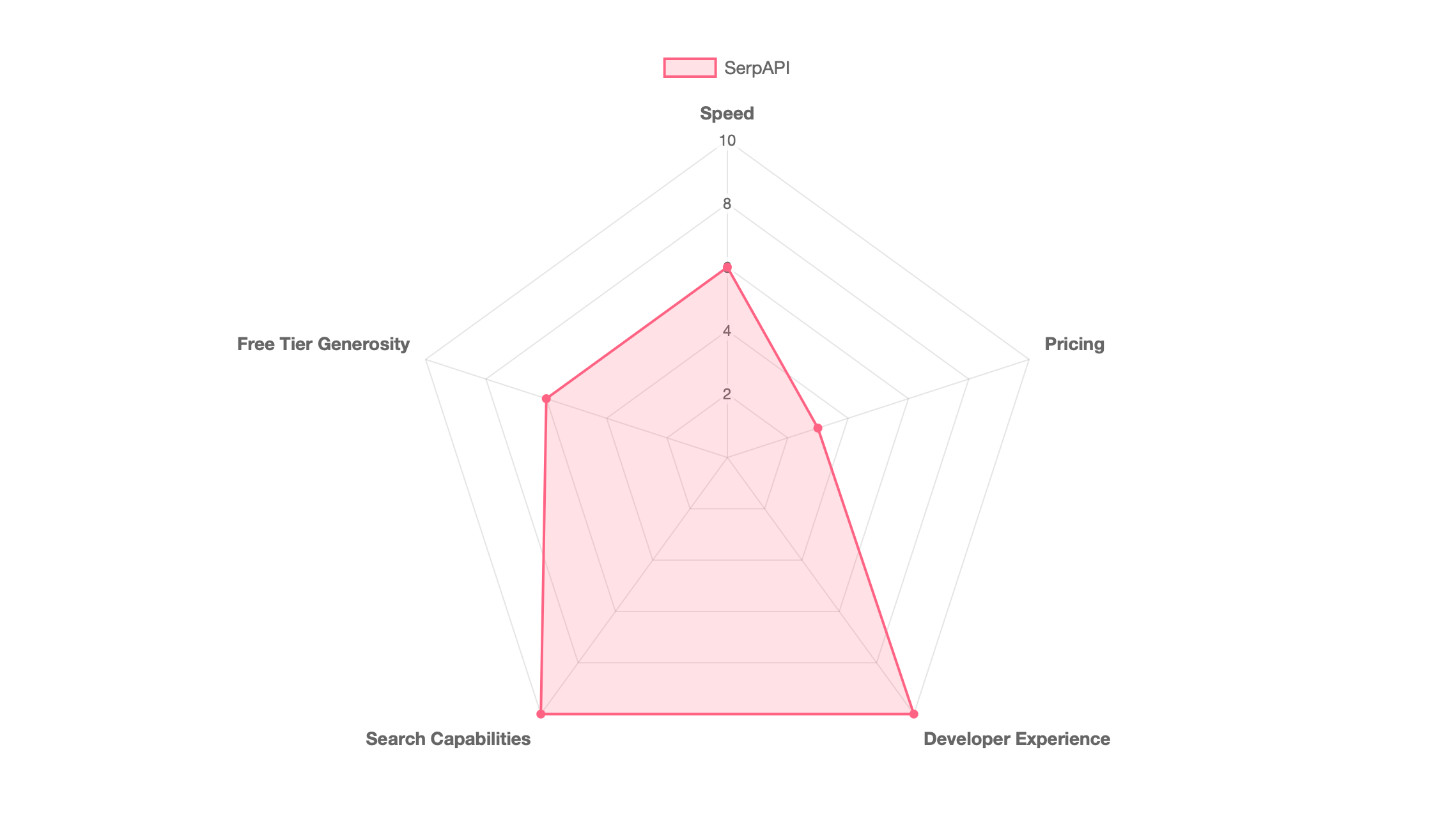

SerpAPI

SerpAPI scrapes 20+ search engines and returns structured JSON. The service handles CAPTCHA solving and proxy rotation automatically, so you make a request and get back parsed data without dealing with blocks or rate limits.

Speed

Our 50-query benchmark measured 2.972s average response time with 100% success rate. Response times ranged from 0.070s to 13.308s, showing high variability. The free tier uses "Best Effort" speed with automatic refunds for failed searches. Paid tiers offer Ludicrous Speed claiming 2.2x faster responses for time-sensitive applications. SerpAPI's status page reports 99.911% uptime over the last 30 days.

Speed score (6.0/10): Mid-tier performance at 2.972s average places SerpAPI fourth out of five tested APIs, though the wide variance suggests some queries complete in milliseconds while others take 10+ seconds.

Pricing

SerpAPI's pricing uses monthly subscriptions based on search volume. Plans start at $75/month for 5,000 searches, which works out to $15 per 1,000 searches or $0.015 per search. Production tier and above include U.S. Legal Shield, providing legal defense for crawling public search data under First Amendment rights.

Pricing score (3.0/10): At $15 per 1,000 searches, SerpAPI costs 3-15x more than alternatives like Scrapingdog ($0.20) or Exa ($5), earning the lowest pricing score among tested APIs.

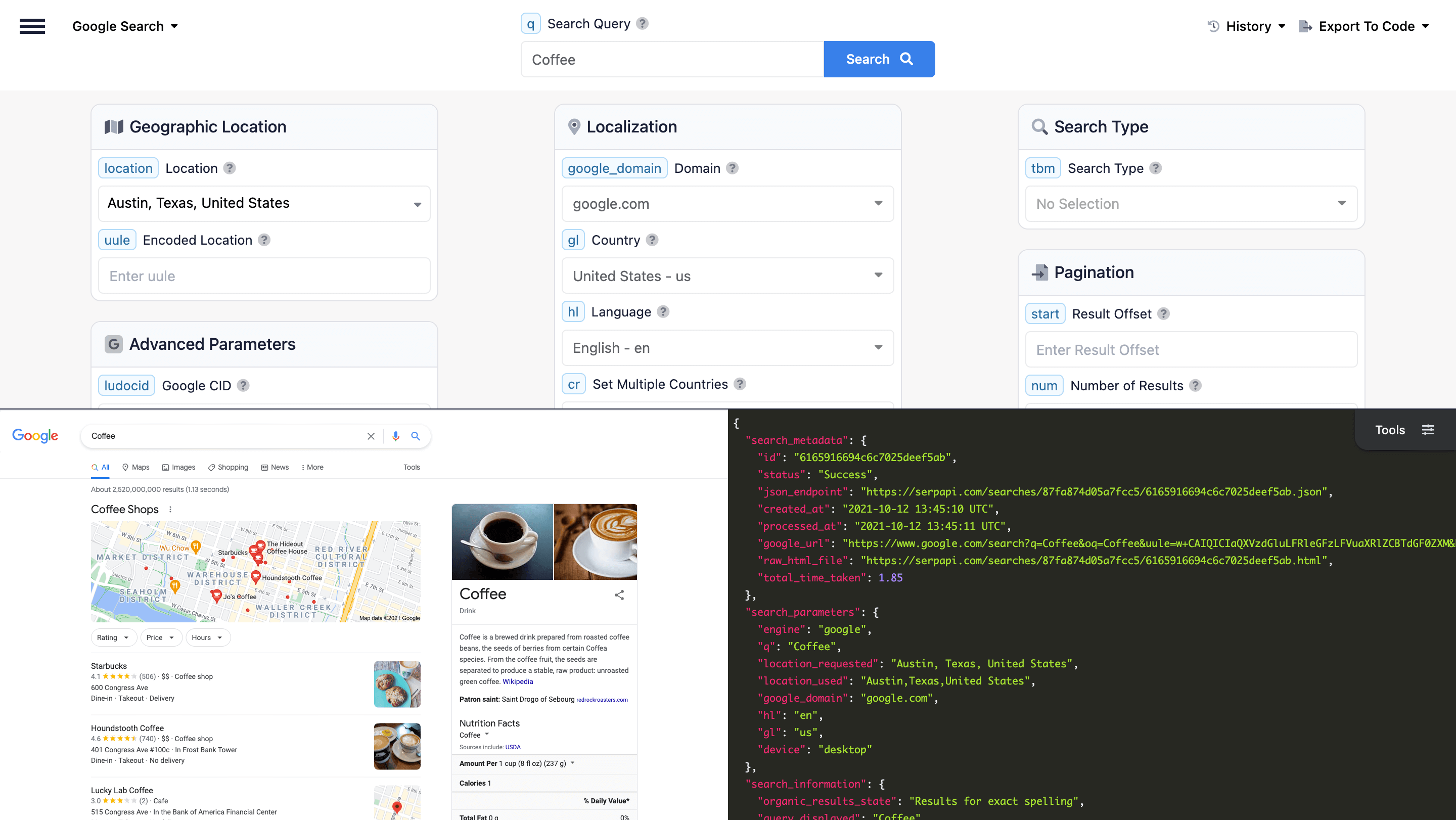

Developer experience

SerpAPI provides SDKs for Ruby, Python, JavaScript, Golang, PHP, Java, Rust, and .NET. Setup takes about 5 minutes:

from serpapi import GoogleSearch

params = {

"q": "coffee",

"location": "Austin, Texas",

"api_key": "your_api_key"

}

search = GoogleSearch(params)

results = search.get_dict()

for result in results["organic_results"]:

print(result["title"])

SerpAPI's Playground lets you test queries through a web interface before writing code. You modify parameters through form controls and see both the rendered SERP and JSON response. The dashboard tracks your usage with response time graphs and search history.

Developer experience score (10.0/10): Eight official SDKs, an interactive playground, comprehensive documentation, and real-time usage dashboards make SerpAPI the strongest developer experience among tested APIs.

Search capabilities

SerpAPI covers Google (including AI Mode and AI Overview), Bing, Yahoo, Baidu, DuckDuckGo, Yandex, Amazon, eBay, Walmart, Home Depot, YouTube, Google Scholar, Google Patents, TripAdvisor, and Yelp. Each platform has its own API endpoint with consistent JSON formatting. Most competitors support 3-5 search engines at most.

Search capabilities score (10.0/10): Coverage of 20+ search engines and specialized platforms far exceeds any competitor, making SerpAPI the only choice for applications needing multi-engine support.

Free tier generosity

The free tier provides 250 searches per month with no credit card required. All API endpoints work on the free tier, though you're limited to Best Effort speed without access to Ludicrous Speed tiers. Failed searches receive automatic refunds, so the 250-search limit only counts successful requests.

Free tier score (6.0/10): The 250 searches per month places SerpAPI mid-tier, more generous than Scrapingbee (66 total) but less than Tavily (1,000/month), Exa (2,000 one-time), or Scrapingdog (11,000 with promotions).

Choose SerpAPI when you need breadth of coverage

SerpAPI works best when you need access to multiple search engines or specialized platforms through one provider. SEO tools monitoring rankings across Google, Bing, and Yahoo avoid maintaining separate integrations. E-commerce price monitoring pulls structured data from Amazon, Walmart, and eBay. Academic research platforms integrate Google Scholar without parsing citation formats. The $15 per 1,000 searches pays for itself when infrastructure complexity is the bottleneck.

Skip SerpAPI if you only need Google search on a tight budget. At $0.015 per search, you're paying 3-15x more than alternatives. AI applications requiring semantic search or embeddings should use Exa or Tavily instead.

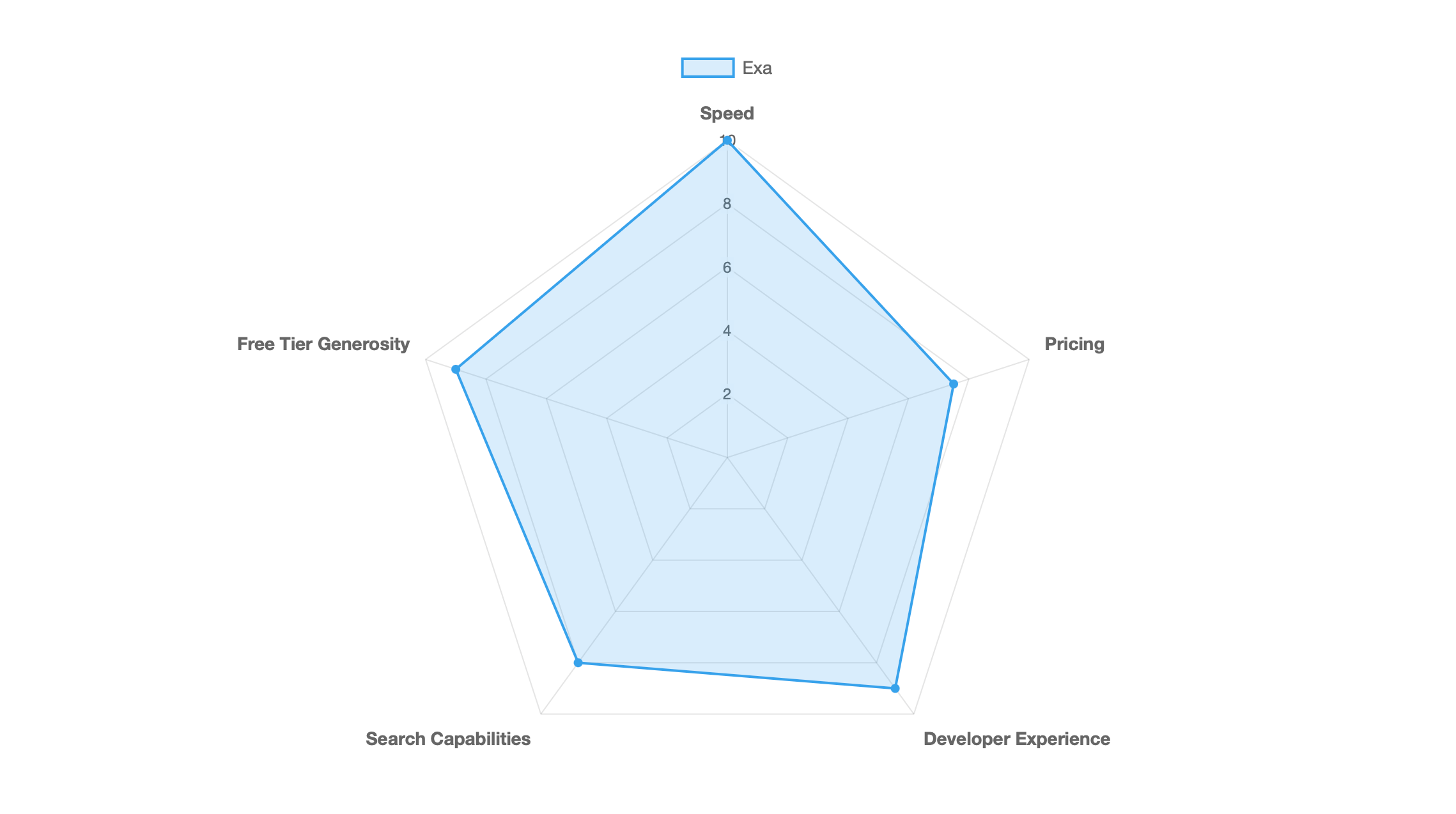

Exa

Exa is a search API built for AI applications using a proprietary embeddings-based index. Instead of scraping Google or Bing, Exa maintains its own crawl optimized for semantic search.

Speed

Our 50-query benchmark measured 1.180s average response time with 100% success rate. Response times ranged from 1.003s to 2.120s. Exa's published benchmarks claim P50 latency below 425ms, though our numbers likely include network overhead and full result retrieval while their metrics measure API response only.

Speed score (10.0/10): At 1.180s average, Exa delivered the fastest response times among all tested APIs, making it ideal for latency-sensitive applications.

Pricing

Exa's pricing charges $5 per 1,000 searches for 1-25 results ($0.005 per search). Requesting 26-100 results jumps to $25 per 1,000 searches ($0.025 per search), a 5x increase. Additional endpoints cost separately: contents retrieval runs $1 per 1,000 pages, answers and research tasks run $5 per 1,000 calls.

Pricing score (7.5/10): At $5 per 1,000 searches, Exa sits in the affordable mid-tier, significantly cheaper than SerpAPI ($15) but more expensive than Scrapingdog ($0.20).

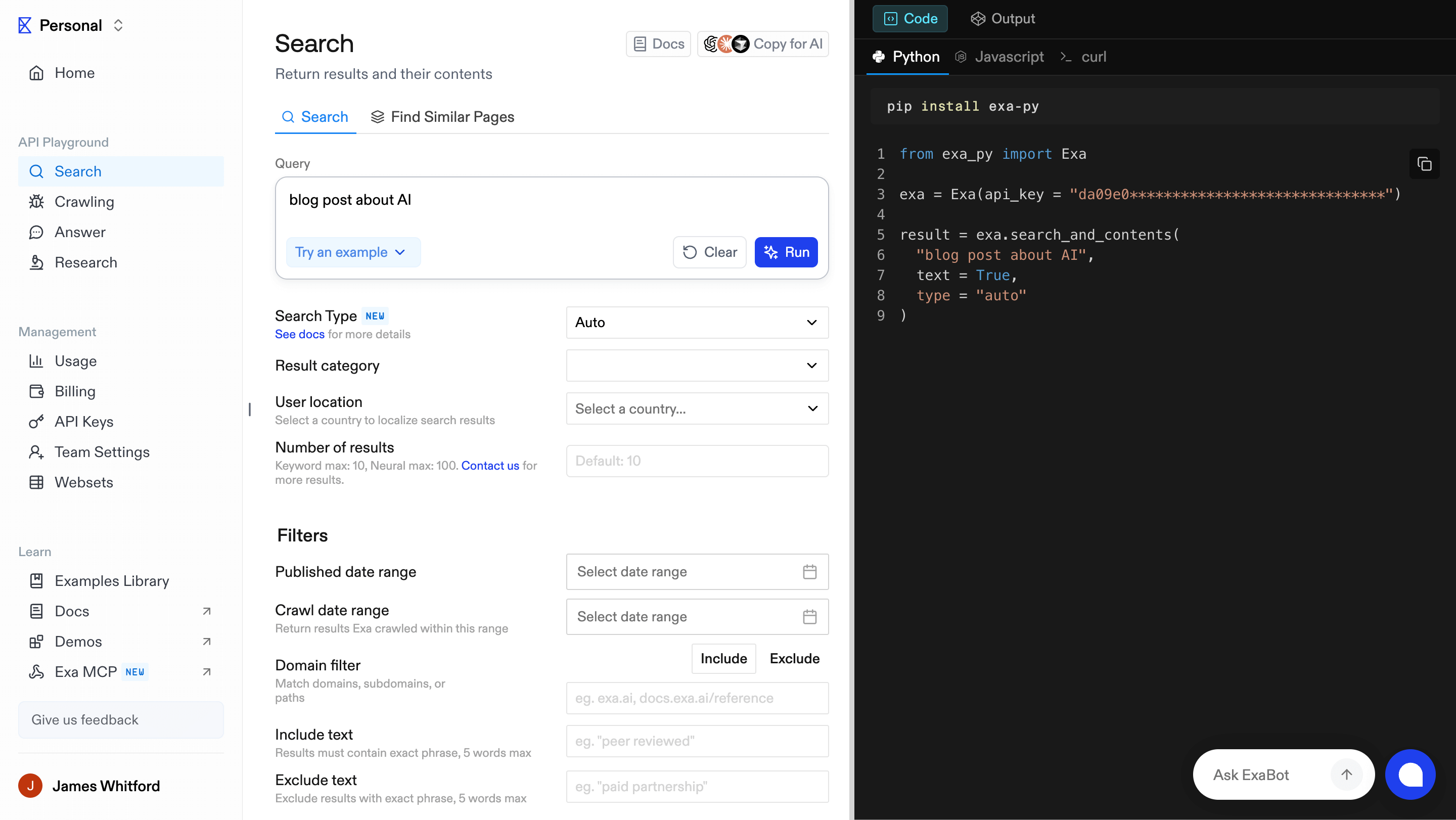

Developer experience

Exa provides SDKs for Python (exa-py) and JavaScript (exa-js), both with full TypeScript definitions. Setup takes about 5 minutes:

from exa_py import Exa

exa = Exa(api_key="your-api-key")

results = exa.search("coffee")

for result in results.results:

print(result.title)

The Playground lets you test queries with controls for search type, filters, and AI features while generating code snippets. Documentation includes integration examples for LangChain, LlamaIndex, CrewAI, Anthropic, and OpenAI, plus an MCP integration for Cursor.

Developer experience score (9.0/10): Two official SDKs with TypeScript support, interactive playground, comprehensive docs, and MCP integration provide excellent developer experience, just slightly behind SerpAPI's eight-language SDK coverage.

Search capabilities

Exa maintains a proprietary search index built for AI applications rather than scraping Google or Bing. The embeddings-based index covers web content, research papers, news, GitHub repositories, tweets, and PDFs with category filters and date filtering. The API provides multiple search modes (neural for semantic matching, keyword for traditional results, auto for intelligent combination) plus endpoints for content retrieval, similarity search, and automated research.

Search capabilities score (8.0/10): Proprietary AI-powered semantic search offers unique capabilities for meaning-based queries, though it can't replicate specific search engine results like SerpAPI.

Free tier generosity

The free tier provides $10 in credits with no expiration and no credit card requirement. At $5 per 1,000 searches for standard queries (1-25 results), this translates to 2,000 free searches. You can allocate credits across other endpoints (research/answers at 2,000 calls, content retrieval at 10,000 pages). All features work on the free tier including semantic search, similarity search, and AI-powered summaries.

Free tier score (9.0/10): 2,000 one-time free searches with no expiration places Exa among the most generous free tiers, second only to Scrapingdog's 11,000 credits.

Choose Exa when you need semantic search

Exa works best for AI agents, RAG systems, or LLM-powered research tools that benefit from meaning-based search. Voice AI companions leverage sub-2-second response times for fast web retrieval. Research platforms use the structured research endpoint to generate reports with citations. Chatbots get clean, parsed HTML without dealing with CAPTCHA.

Skip Exa if you need exact Google SERP replication for SEO monitoring or Google Shopping data for price comparison. Watch the 5x price jump when requesting 26-100 results per query ($0.025 per search approaches premium pricing without multi-engine coverage).

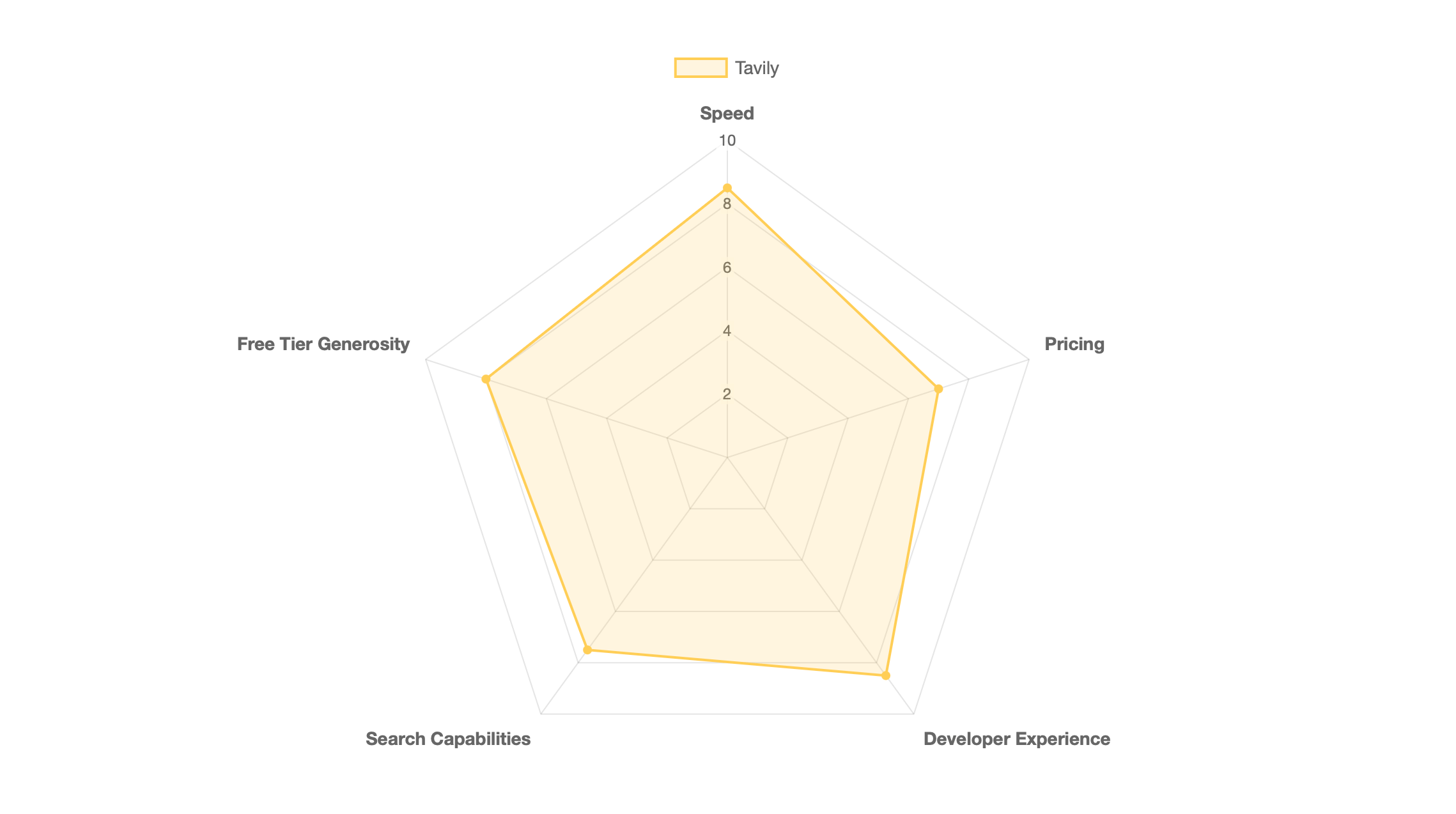

Tavily

Tavily is a search API optimized for LLMs and RAG applications. Instead of returning URLs with snippets, Tavily aggregates content from up to 20 sources per query, ranks them using proprietary AI, and delivers parsed content ready for LLM consumption.

Speed

Our 50-query benchmark measured 1.885s average response time with 100% success rate. Response times ranged from 1.583s to 2.897s. The response time reflects that Tavily fetches, parses, and ranks content before responding, while traditional SERP APIs return URLs and leave content extraction to you. Tavily's published benchmarks focus on accuracy (93.3% on SimpleQA) rather than raw speed.

Speed score (8.5/10): At 1.885s average, Tavily ranks third among tested APIs, delivering strong performance considering it aggregates and parses content from multiple sources.

Pricing

Tavily's pricing uses a credit-based system. Pay-as-you-go charges $0.008 per credit. Basic search consumes 1 credit per request ($8 per 1,000 searches), while advanced search uses 2 credits. Subscription tiers start at $30/month for 4,000 credits ($0.0075 per credit) and scale down to $0.005 per credit on the Growth plan.

Pricing score (7.0/10): At $8 per 1,000 searches on pay-as-you-go, Tavily offers mid-tier pricing between premium options like SerpAPI ($15) and budget options like Scrapingdog ($0.20).

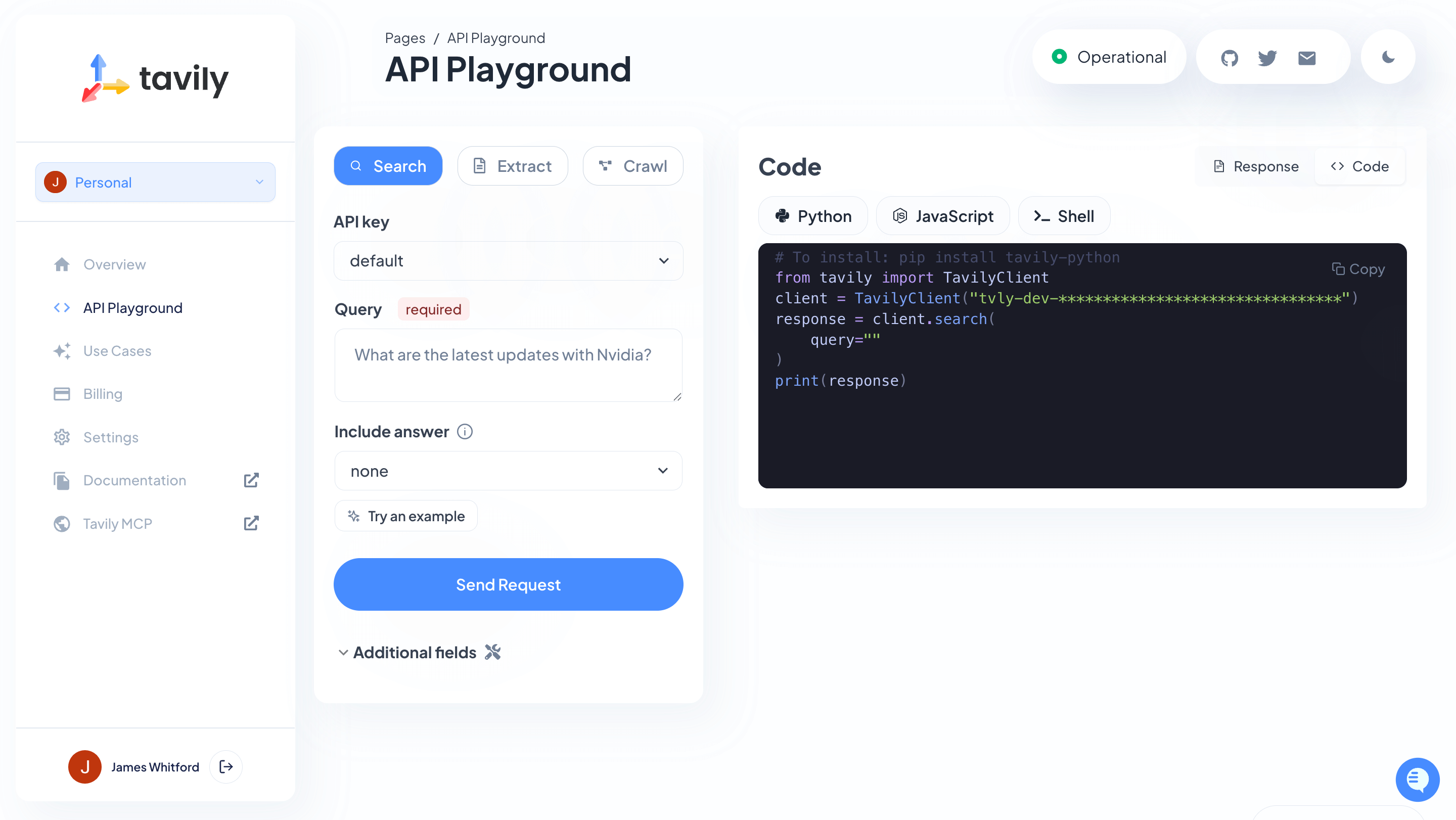

Developer experience

Tavily provides SDKs for Python (tavily-python) and JavaScript/TypeScript (@tavily/core). Setup takes about 5 minutes:

from tavily import TavilyClient

client = TavilyClient(api_key="tvly-YOUR_API_KEY")

response = client.search("coffee")

for result in response["results"]:

print(result["title"])

Tavily's Playground generates real-time code as you build queries. Adjust parameters like search depth and result count, and the right panel updates with Python, JavaScript, or cURL code. Documentation includes integration guides for LangChain, LlamaIndex, OpenAI, Anthropic, Vercel AI, and Zapier.

Developer experience score (8.5/10): Two official SDKs, real-time code generation in the playground, comprehensive integration guides, and MCP support provide strong developer experience, comparable to Exa.

Search capabilities

Tavily uses proprietary AI ranking to aggregate content from up to 20 sources per query. The API provides four endpoints: search (factual queries with AI ranking), extract (clean content from URLs), map (page discovery), and crawl (combined mapping and extraction). Search supports topic filters (general, news, finance), time range filters (day, week, month, year), and domain inclusion/exclusion.

Search capabilities score (7.5/10): AI-powered content aggregation with 93.3% accuracy on SimpleQA benchmarks provides strong factual search, though it can't replicate specific search engine results like SerpAPI or offer semantic search like Exa.

Free tier generosity

The free tier provides 1,000 credits per month with no credit card requirement. At 1 credit per basic search, this translates to 1,000 free searches monthly. You can allocate credits across advanced searches (2 credits), content extraction (1 credit per 5 URLs), or mapping (1 credit per 10 pages). All features work on the free tier with 100 requests per minute rate limiting.

Free tier score (8.0/10): 1,000 recurring monthly credits places Tavily among the more generous free tiers, better than SerpAPI (250/month) or Scrapingbee (66 total) but less than Exa (2,000 one-time) or Scrapingdog (11,000).

Choose Tavily for AI-optimized search

Tavily fits RAG applications, AI agents, or LLM-powered assistants that need factual, grounded web information. Chatbots get ranked content with source citations, research automation tools use the crawl endpoint to map entire domains, and content aggregation platforms pull clean text without handling CAPTCHA. The 93.3% accuracy on factual benchmarks and automatic content filtering reduce manual validation needs.

Skip Tavily for exact Google or Bing SERP layouts needed in SEO monitoring or structured shopping results for price comparison. Applications needing only URLs without content extraction should consider lighter-weight SERP APIs.

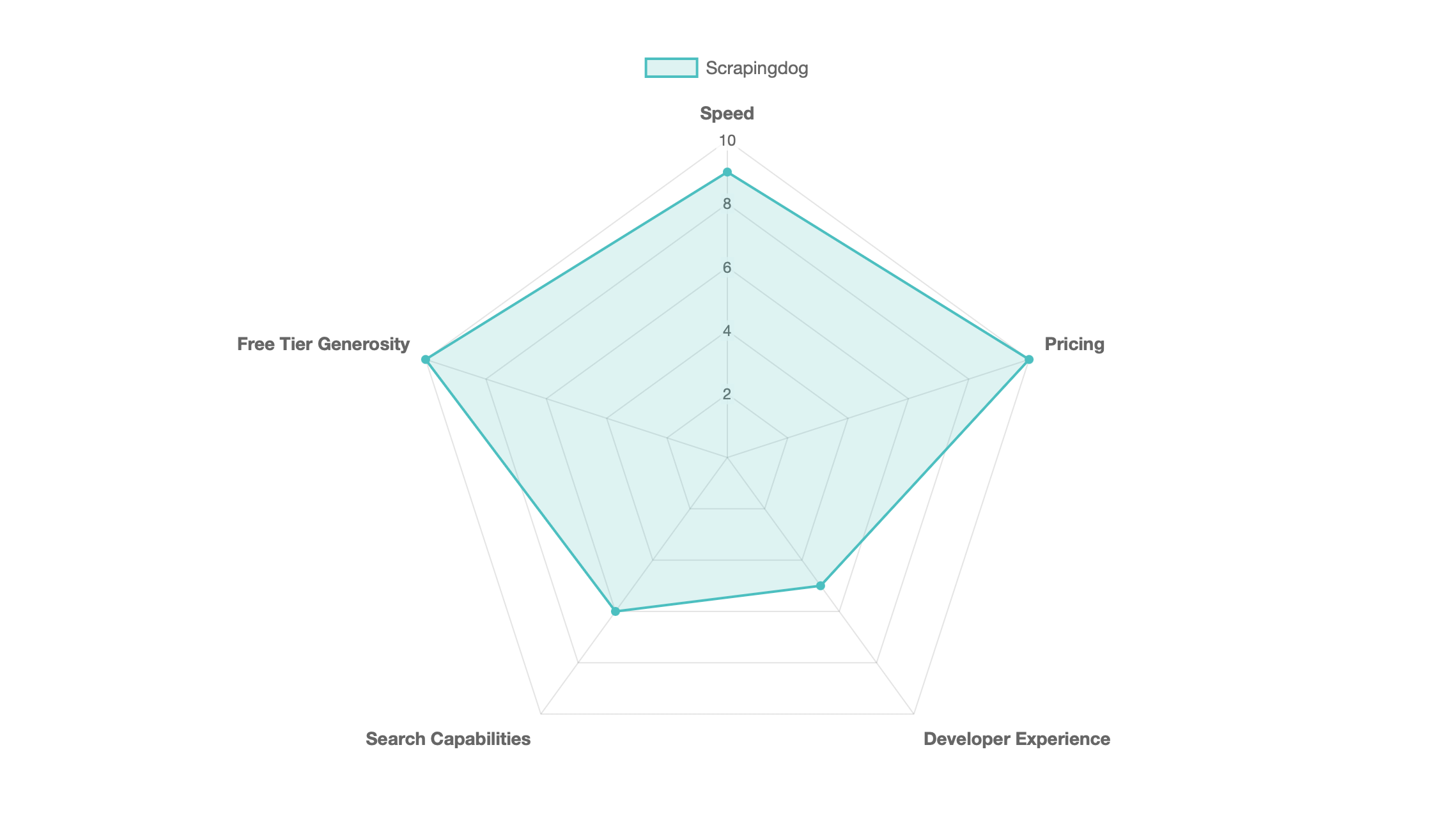

Scrapingdog

Scrapingdog is a web scraping service offering SERP API access alongside scrapers for Amazon, LinkedIn Jobs, Instagram, and other platforms. The service provides a Google Search API and a Universal Search API that aggregates and de-duplicates results across Google, Bing, Yahoo, and DuckDuckGo.

Speed

Our 50-query benchmark measured 1.700s average response time with 100% success rate. Response times ranged from 0.709s to 4.432s. The measured performance contradicts Scrapingdog's published benchmarks, which claim 1.25 second response times for Google SERP requests, though our 1.700s average is reasonably close.

Speed score (9.0/10): At 1.700s average, Scrapingdog ranks second among tested APIs, delivering strong performance at the lowest price point.

Pricing

Scrapingdog's pricing uses a credit-based system with monthly subscriptions. Plans start at $40/month for 200,000 credits (Light) and scale to $30,000/month for 1 billion credits (Nova Pro). At 1 credit per Google search, the cost per 1,000 searches ranges from $0.20 (Light) to under $0.06 at high-volume tiers.

Pricing score (10.0/10): At $0.20-$0.06 per 1,000 searches, Scrapingdog offers the cheapest pricing among all tested APIs, making it ideal for high-volume projects.

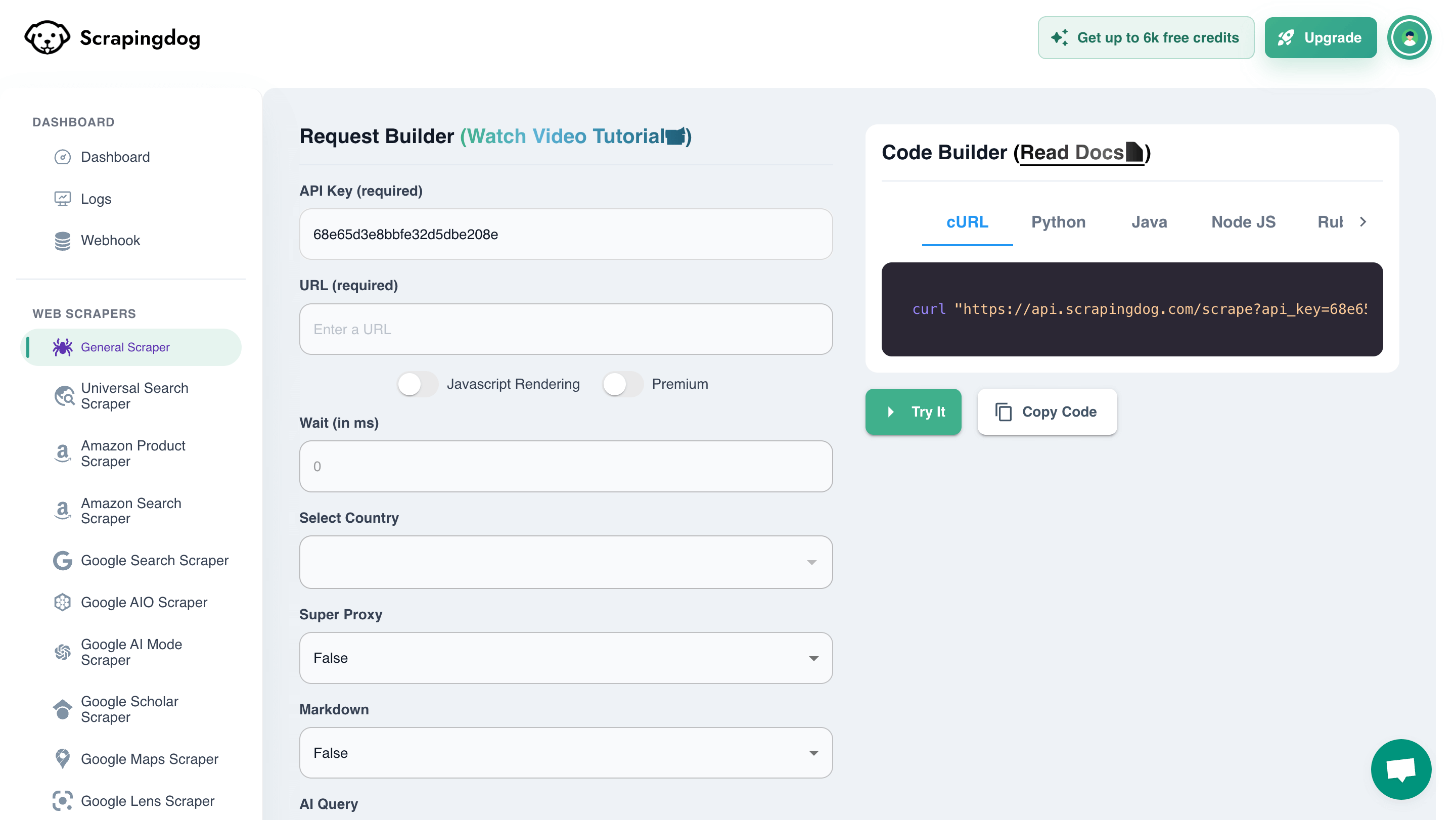

Developer experience

Scrapingdog's documentation covers authentication, error codes, and endpoint references, though some pages returned 404 errors during testing. The service lacks official SDKs with helper functions found in competing APIs, requiring direct REST API calls using standard HTTP libraries. The dashboard's request builder generates code in cURL, Python, Java, Node.js, Ruby, PHP, Go, and C# as you configure parameters.

Setup takes approximately 5-10 minutes:

import requests

url = "https://api.scrapingdog.com/google"

params = {

"api_key": "YOUR_API_KEY",

"query": "coffee",

"results": 10

}

response = requests.get(url, params=params)

data = response.json()

for result in data.get("organic_results", []):

print(result["title"])

Developer experience score (5.0/10): No official SDKs, documentation gaps with 404 errors, and REST-only integration give Scrapingdog the weakest developer experience among tested APIs.

Search capabilities

Scrapingdog's Google Search API provides access to Google web search with organic results, People Also Ask sections, related searches, and multiple search types (web, images, news, shopping). The Universal Search API aggregates and de-duplicates results from Google, Bing, Yahoo, and DuckDuckGo in a single request. Beyond search engines, Scrapingdog provides dedicated scrapers for Amazon, Walmart, eBay, LinkedIn Jobs, Instagram, and other platforms.

Search capabilities score (6.0/10): Coverage of 4 search engines via Universal Search API with de-duplication provides decent breadth, though significantly less than SerpAPI's 20+ platforms.

Free tier generosity

The free tier provides 1,000 request credits with no expiration and no credit card requirement. Optional social media promotions add 4,000 credits for following on X and 6,000 for posting a LinkedIn review, bringing the total to 11,000 credits. Free tier users access all API endpoints including the Universal Search API, JavaScript rendering, geo-targeting, and AI extraction features.

Free tier score (10.0/10): 11,000 total free credits (with promotions) is the most generous free tier among all tested APIs, ideal for extensive evaluation and small projects.

Choose Scrapingdog for cost-optimized scraping

Scrapingdog fits high-volume projects where per-request cost matters more than response time. Data collection pipelines requiring millions of monthly searches benefit from pricing that drops to $0.00006 per request at scale. Lead generation tools can consolidate Google search results alongside LinkedIn profiles, Instagram accounts, or e-commerce platforms through a single provider. The Universal Search API's de-duplication feature benefits SEO tools comparing rankings across multiple search engines without manual result matching.

Skip Scrapingdog for projects requiring official SDKs and comprehensive documentation. The REST-only API and documentation gaps observed during testing make integration more challenging than competitors with polished SDKs.

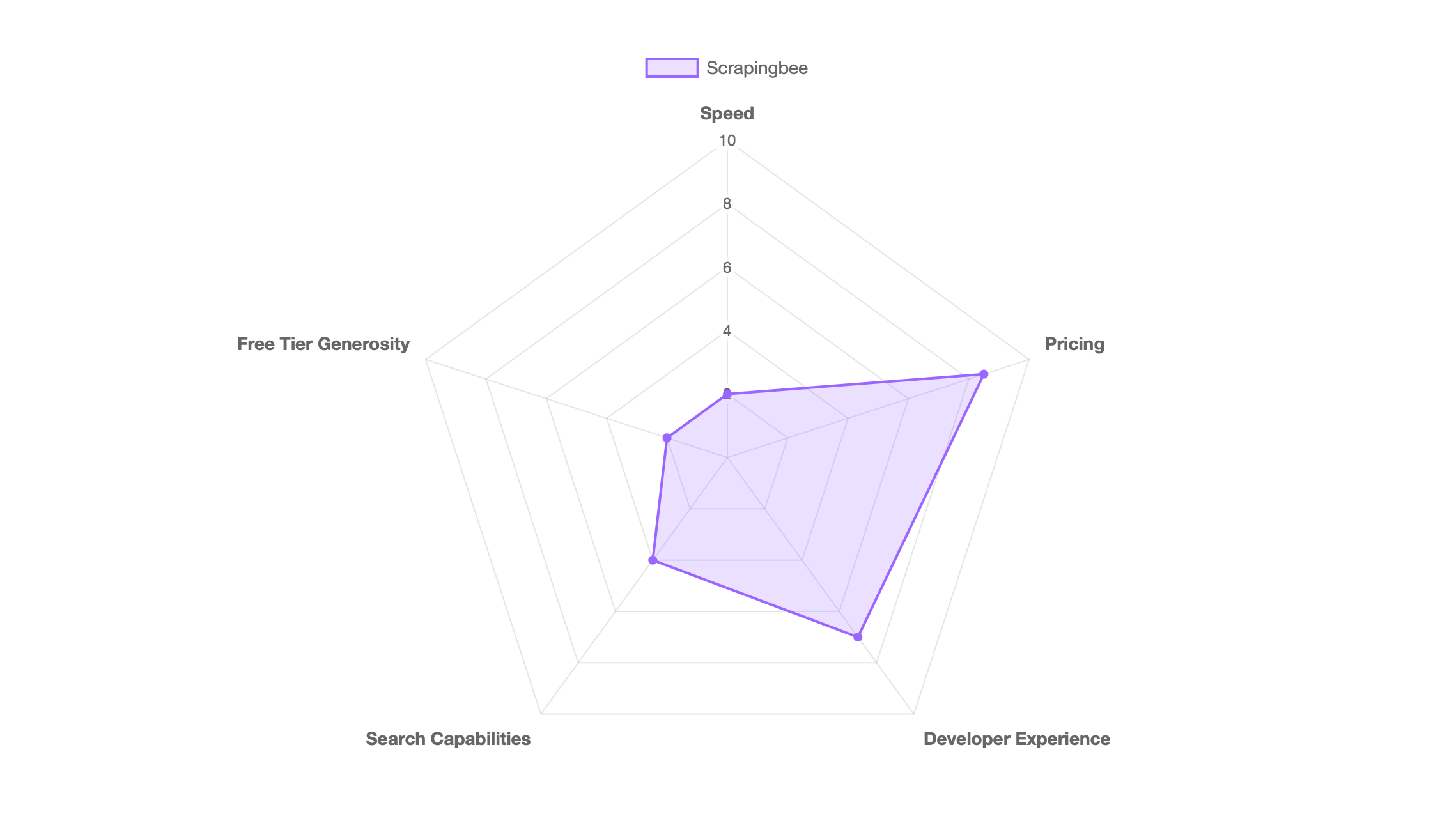

Scrapingbee

Scrapingbee is a general-purpose web scraping service that includes Google Search API functionality. Acquired by Oxylabs in June 2025, it targets developers needing headless browser rendering, JavaScript execution, and proxy rotation across multiple websites including e-commerce platforms, social media sites, and search engines.

Speed

Our 50-query benchmark measured 7.257s average response time with 100% success rate. Response times ranged from 1.453s to 22.978s, creating a 16x performance spread that makes response time prediction difficult. Competitor benchmarks from Scrapingdog show 90% success rate with 16.28 second average response time for Google SERP requests, confirming high variability.

Speed score (2.0/10): At 7.257s average, Scrapingbee delivered the slowest response times among all tested APIs, with extreme variability reflecting its positioning as a general web scraper rather than SERP-optimized service.

Pricing

Scrapingbee's pricing uses a credit-based system where Google Search API requests cost 15 credits per call. Monthly plans start at $49.99 for 250,000 credits. For 1,000 Google searches at 15 credits per request, costs range from $0.56 to $1.44 depending on tier. Variable credit costs create billing unpredictability as enabling premium proxies increases request cost from 5 to 25 credits (5x multiplier).

Pricing score (8.5/10): At $0.56-$1.44 per 1,000 searches, Scrapingbee offers competitive pricing in the mid-to-low range, more affordable than SerpAPI ($15) or Exa ($5) but slightly more expensive than Scrapingdog ($0.20).

Developer experience

Scrapingbee's documentation provides endpoint references, authentication guides, and code examples across Python, Node.js, Java, Ruby, PHP, Go, and C#. The request builder interface shows real-time credit costs and generates code in multiple languages as you configure parameters. Scrapingbee provides official Python and Node.js SDKs that wrap authentication and parameter formatting.

Setup takes approximately 5-10 minutes:

import requests

url = "https://app.scrapingbee.com/api/v1"

params = {

"api_key": "YOUR_API_KEY",

"search": "coffee",

"search_type": "search"

}

response = requests.get(url, params=params)

data = response.json()

for result in data.get("organic_results", []):

print(result["title"])

Developer experience score (7.0/10): Two official SDKs, comprehensive documentation, and a request builder with real-time cost estimation provide decent developer experience, though credits charged for failed requests create cost uncertainty.

Search capabilities

Scrapingbee's Google Search API provides access to Google web search only with structured JSON including organic results, local results, ads, related queries, images, news results, knowledge graph data, and top stories. Scrapingbee supports multiple Google search types including web, news, Maps, and image search. The platform provides dedicated scrapers for Amazon, Walmart, and ChatGPT API alongside general HTML scraping.

Search capabilities score (4.0/10): Google-only coverage limits Scrapingbee to a single search engine, significantly less than SerpAPI (20+ engines), Scrapingdog (4 engines), or the proprietary search capabilities of Exa and Tavily.

Free tier generosity

The free tier provides 1,000 API credits with no credit card required and no time expiration. At 15 credits per Google search, this translates to approximately 66 free searches. The 1,000 credits cover diverse use cases including HTML scraping (5 credits), Amazon scraping (15 credits), and Walmart scraping (15 credits), with all platform features available including JavaScript rendering, geo-targeting, and premium proxies at higher credit costs.

Free tier score (2.0/10): 66 total free searches is the least generous free tier among all tested APIs, limiting evaluation and making it difficult to thoroughly test the service before committing.

Choose Scrapingbee for unified web scraping

Scrapingbee fits projects requiring both SERP data and general web scraping in a unified platform. Teams already scraping e-commerce sites, social media profiles, or JavaScript-heavy web applications can consolidate Google search results through the same service. Projects with flexible latency requirements can tolerate 7.257-second average response times and high variability for data collection pipelines, batch processing systems, or research workflows prioritizing breadth over speed.

Skip Scrapingbee for dedicated SERP API needs. The 66-search free tier limits evaluation, Google-only coverage restricts multi-engine analysis, and 7.257-second average response times with 23-second worst-case performance make it unsuitable for real-time voice assistants or live autocomplete suggestions.

SerpAPI vs Tavily

SerpAPI and Tavily represent different approaches to SERP data. SerpAPI scrapes existing search engines like Google and Bing, returning their results as structured JSON. Tavily runs its own search engine that aggregates content from up to 20 sites and ranks it using proprietary AI. This architectural difference determines which one fits your use case.

Mirror Google or aggregate multiple sources?

When you query SerpAPI for Google results, you get exactly what appears on Google with all its ranking signals, personalization, and algorithmic biases. When you query Amazon, you get Amazon's product listings ranked by Amazon's algorithm. This matters for SEO monitoring, competitor analysis, and applications that need to replicate what users see on specific platforms. SerpAPI supports 20+ search engines with dedicated endpoints for each.

Tavily doesn't scrape existing engines. It aggregates and ranks content from multiple sources per query, scoring relevance with AI and formatting results for LLM consumption. You can't get "Bing results" from Tavily because Tavily is the search engine. This matters for AI agents and RAG applications where answer accuracy matters more than which search engine provided it.

Our benchmarks measured SerpAPI at 0.072 seconds average response time versus Tavily's 2.327 seconds. The 32x speed difference reflects Tavily's extra processing: searching up to 20 sites, applying AI ranking, extracting content, and formatting for LLM context windows. SerpAPI returns Google's results as-is with minimal overhead.

Choose SerpAPI when you need specific search engines

Build with SerpAPI when you need to replicate what users see on specific platforms. SEO monitoring tools that track keyword rankings across Google, Bing, and other engines require SerpAPI's multi-engine support. Price comparison tools need Amazon, eBay, and Walmart product listings. Academic research applications can use SerpAPI's Scholar endpoint instead of building separate scrapers.

The 0.072-second response time fits latency-sensitive applications like browser extensions, real-time dashboards, and user-facing search features. When your application needs to feel instant, the 32x speed advantage matters.

Choose Tavily when you're feeding LLMs

Build with Tavily when creating AI agents, RAG applications, or LLM-powered tools that need accurate information rather than specific search engine results. Tavily's 93.3% accuracy on SimpleQA and LLM-optimized formatting reduce the post-processing needed to integrate search into your agent's workflow.

The multi-source aggregation helps when you care about answer quality over result provenance. Instead of scraping Google's top result and hoping it's accurate, Tavily retrieves up to 20 sources and ranks them by relevance. If your application shows users "here's what I found about X" rather than "here are Google's top 10 results for X," Tavily's AI-scored relevance provides better raw material.

The 2.327-second response time becomes acceptable when the alternative is making multiple follow-up requests to scrape and parse content from traditional SERP results.

How to choose the right SERP API

SerpAPI works best when you need exact Google (or Bing, or Amazon) results with sub-100ms response times. The $15 per 1,000 searches pays for 20+ search engine coverage and infrastructure that handles CAPTCHA solving automatically. SEO monitoring tools and price comparison platforms that need traditional SERP layouts benefit from the 0.072-second average we measured.

Exa fits AI applications needing semantic search over a proprietary index. The $5 per 1,000 searches includes embeddings and meaning-based matching that keyword APIs don't provide. At 1.189 seconds average, Exa delivers the fastest semantic search among tested options. The 2,000 free searches make evaluation straightforward.

Tavily targets RAG pipelines requiring factual, multi-source content. The service aggregates up to 20 sites per query and formats results for LLM consumption at $5-$8 per 1,000 searches. The 1,000 monthly free credits that reset support sustained prototyping. Choose Tavily when accuracy matters more than speed.

Scrapingdog offers the lowest per-request pricing at scale, dropping to $0.000058 per request for high-volume users. Our 3.762-second average contradicted their claimed 1.25-second benchmark, but the cost advantage persists for budget-focused projects. The Universal Search API de-duplicates across four engines in single requests.

Scrapingbee makes sense for teams already scraping e-commerce or general websites who want to consolidate SERP access through one provider. The 5.411-second average and high variability make it unsuitable for latency-sensitive applications. At 66 free searches versus competitors offering 250-2,000, the free tier limits evaluation depth.

Frequently asked questions

What is a SERP API?

A SERP API provides programmatic access to search engine results through structured JSON responses. Instead of manually searching Google and scraping HTML, you send an HTTP request with query parameters and receive formatted data including organic results, ads, knowledge panels, and related searches. These APIs handle CAPTCHA solving and proxy rotation automatically.

Are SERP APIs legal?

SERP APIs operate in a legal gray area. In the United States, hiQ Labs v. LinkedIn (2019) established that scraping publicly accessible data doesn't violate the Computer Fraud and Abuse Act. Search engine terms of service typically prohibit automated access, but enforcement focuses more on technical countermeasures than legal action. SerpAPI offers U.S. Legal Shield on paid plans, covering legal fees if you face action for scraping public search data.

How do SERP APIs avoid getting blocked?

SERP APIs use rotating residential proxies, CAPTCHA solving services, and request pattern randomization to avoid detection. They distribute requests across thousands of IP addresses that appear as regular user traffic. When search engines implement new anti-bot measures, the APIs update their infrastructure to maintain access. This is why using an API makes more sense than building your own scraping system.

Can I use SERP APIs for commercial projects?

Yes, all five APIs support commercial use. Check your plan's terms of service for specific restrictions. Most APIs prohibit reselling raw SERP data to competitors but allow using the data in your own products. SerpAPI's pricing page and Tavily's documentation specify commercial terms. Free tiers typically allow commercial use with volume limitations.

Testing methodology

We tested each API with fifty standardized queries: "python tutorials", "best coffee makers 2025", "machine learning", "weather forecast" etc. The Python script measured response time from request to complete JSON parsing and verified successful responses. Each test ran once per API to simulate real-world usage.

import requests

import time

import os

import json

from dotenv import load_dotenv

from urllib.parse import quote_plus

load_dotenv()

def test_serpapi(query):

start = time.time()

response = requests.get("https://serpapi.com/search", params={

"q": query,

"api_key": os.getenv("SERPAPI_KEY")

})

elapsed = time.time() - start

return elapsed, response.status_code == 200

def test_exa(query):

start = time.time()

response = requests.post("https://api.exa.ai/search",

headers={"x-api-key": os.getenv("EXA_KEY")},

json={"query": query, "numResults": 10})

elapsed = time.time() - start

return elapsed, response.status_code == 200

def test_tavily(query):

start = time.time()

response = requests.post("https://api.tavily.com/search",

json={"api_key": os.getenv("TAVILY_KEY"), "query": query})

elapsed = time.time() - start

return elapsed, response.status_code == 200

def test_scrapingdog(query):

start = time.time()

response = requests.get("https://api.scrapingdog.com/google", params={

"api_key": os.getenv("SCRAPINGDOG_KEY"),

"query": query

})

elapsed = time.time() - start

return elapsed, response.status_code == 200

def test_scrapingbee(query):

start = time.time()

encoded_query = quote_plus(query)

response = requests.get("https://app.scrapingbee.com/api/v1/", params={

"api_key": os.getenv("SCRAPINGBEE_KEY"),

"url": f"https://www.google.com/search?q={encoded_query}",

"render_js": "false",

"custom_google": "True"

})

elapsed = time.time() - start

return elapsed, response.status_code == 200

queries = [

"python tutorials", "best coffee makers 2025", "machine learning",

"weather forecast", "electric cars", "react hooks tutorial",

"best restaurants near me", "data science courses", "yoga poses",

"home workout routines", "javascript async await", "docker compose",

"kubernetes tutorial", "aws lambda pricing", "terraform vs ansible",

"best noise cancelling headphones", "meal prep ideas", "sourdough recipe",

"running shoes 2025", "meditation techniques", "typescript generics",

"postgres indexing", "redis caching", "mongodb aggregation",

"git rebase vs merge", "vim shortcuts", "linux commands",

"css flexbox", "tailwind css", "nextjs routing",

"graphql vs rest", "microservices architecture", "api gateway",

"oauth2 flow", "jwt authentication", "password hashing",

"sql injection prevention", "xss attack", "csrf protection",

"seo best practices", "meta tags", "sitemap xml",

"google analytics", "conversion rate optimization", "a/b testing",

"email marketing", "social media strategy", "content marketing",

"project management tools", "agile methodology", "scrum vs kanban"

]

apis = {

"SerpAPI": test_serpapi,

"Exa": test_exa,

"Tavily": test_tavily,

"Scrapingdog": test_scrapingdog,

"Scrapingbee": test_scrapingbee

}

results = {}

for api_name, test_func in apis.items():

print(f"\nTesting {api_name}...")

times = []

successes = 0

for i, query in enumerate(queries, 1):

try:

elapsed, success = test_func(query)

times.append(elapsed)

if success:

successes += 1

print(f" {i}/50: {query[:30]:30s} - {elapsed:.3f}s - {'✓' if success else '✗'}")

except Exception as e:

print(f" {i}/50: {query[:30]:30s} - ERROR: {str(e)[:50]}")

times.append(0)

avg_time = sum(times) / len(times) if times else 0

success_rate = (successes / len(queries)) * 100

fastest = min(times) if times else 0

slowest = max(times) if times else 0

results[api_name] = {

"avg_time": round(avg_time, 3),

"success_rate": round(success_rate, 1),

"fastest": round(fastest, 3),

"slowest": round(slowest, 3),

"total_queries": len(queries),

"successful_queries": successes

}

print(f"\n{api_name} Results:")

print(f" Average: {avg_time:.3f}s")

print(f" Success Rate: {success_rate}%")

print(f" Fastest: {fastest:.3f}s")

print(f" Slowest: {slowest:.3f}s")

# Save results to JSON

with open("test_results.json", "w") as f:

json.dump(results, f, indent=2)

# Print summary

print("\n" + "="*60)

print("FINAL SUMMARY")

print("="*60)

for api_name, data in results.items():

print(f"{api_name:15s} {data['avg_time']:6.3f}s avg, {data['success_rate']:5.1f}% success")

We ran tests with a 100Mbps connection. Network latency impacts absolute times but relative performance remains consistent. Your results may vary based on location, query complexity, and API server load.